Data aggregator from the largest crypto exchanges


An American fintech company asked Sibedge to develop a high-load system that collects trade and quote data from the world’s largest crypto exchanges in real time.

Why collect data from crypto exchanges

The collected data makes the crypto market more transparent, contributes to fair price formation, and is used for reporting to regulatory authorities. It can also be sold to brokers, among whom it is in high demand.

Some countries tax the purchase and sale of cryptocurrencies, so market participants have to report to regulators. In the US, these include the Financial Crimes Enforcement Network, the U.S. Securities and Exchange Commission, the Commodity Futures Trading Commission, and other regulatory bodies.

System requirements

Before turning to Sibedge, the client leased a third-party solution that had many drawbacks: slow data collection, long delays between collection and delivery, and difficulty making changes to someone else’s product. The client decided to build its own system to stop renting and depending on third parties.

The need to address these challenges determined the requirements for the system:

- Collection and delivery of data within one minute.

- Minimal effort to add new crypto exchanges.

- Processing of up to 600,000 events per second from a single exchange.

- Support for WebSocket and REST API for receiving data.

- Future plans to connect up to 100 crypto exchanges to the system.

Avoiding data loss during aggregation was a separate high priority for the project. In addition, exchanges can use different identifiers for the same cryptocurrency, so all received data has to be consolidated into a single unified format before it can be processed and analyzed efficiently.
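Here is a minimal, hypothetical illustration of the identifier problem. Bitcoin, for example, is listed as `BTC` on some venues and `XBT` on others. The `AssetCodes` helper below maps each exchange’s local code to one canonical code before the data enters the unified format. The class name, the exchange names, and the alias table are invented placeholders, not the project’s actual mapping.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical asset-code normalizer (illustration only): maps an exchange's
// local ticker for an asset to one canonical code shared by all exchanges.
final class AssetCodes {
    // "exchange:LOCALCODE" -> canonical code; entries are invented examples
    private static final Map<String, String> ALIASES = Map.of(
            "exchangeA:XBT", "BTC",
            "exchangeB:BTC", "BTC");

    static String canonical(String exchange, String localCode) {
        String code = localCode.toUpperCase(Locale.ROOT);   // normalize letter case
        // Fall back to the (upper-cased) local code when no alias is registered
        return ALIASES.getOrDefault(exchange + ":" + code, code);
    }
}
```

With a table like this, `canonical("exchangeA", "XBT")` and `canonical("exchangeB", "btc")` both yield `BTC`. The downstream components then only ever see one identifier per asset.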

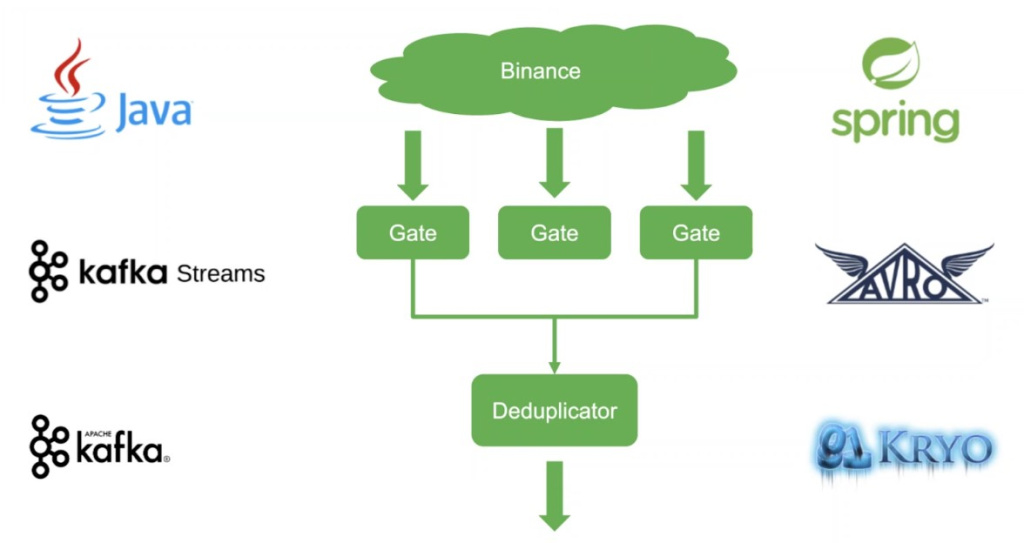

Binance was the first exchange connected to the system. Since the number of exchanges is planned to grow to 100, the initial architecture had to be designed with scaling in mind. The system must also withstand heavy loads, because every crypto exchange produces a continuous, high-volume stream of trade and quote events.

Architecture and technology

Web connections to crypto exchanges are extremely unstable. Exchanges limit the number of requests and the amount of traffic, and connections can drop spontaneously. To make the system more reliable, it connects to each exchange through several Gate components, each of which retrieves its own copy of the same data. This parallel setup prevents the loss of valuable information when one or even several channels break at the same time.
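The following small simulation makes the redundancy argument concrete. It is a hypothetical sketch, not Sibedge’s code. Three Gate threads read the same numbered event stream, and each one misses a different range of events, simulating a disconnect. All copies that do arrive land in one shared queue. `RedundantGates` and the "missed" rules are invented for illustration.

```java
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.LongPredicate;

// Hypothetical simulation of redundant Gate connections (illustration only).
// Every gate reads the same numbered event stream; each LongPredicate marks the
// event IDs that gate misses, e.g. because its connection was down at that moment.
final class RedundantGates {
    static ConcurrentLinkedQueue<Long> run(long events, List<LongPredicate> missedByGate) {
        ConcurrentLinkedQueue<Long> sink = new ConcurrentLinkedQueue<>(); // shared downstream queue
        ExecutorService pool = Executors.newFixedThreadPool(missedByGate.size());
        for (LongPredicate missed : missedByGate) {
            pool.submit(() -> {                             // one thread per gate
                for (long id = 0; id < events; id++) {
                    if (!missed.test(id)) sink.add(id);     // this gate received a copy
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("gates did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sink;
    }
}
```

Consider 1,000 events where the gates miss IDs 100 to 199, IDs 150 to 299, and everything from 400 onward, respectively. Every event still arrives at least once, yet the queue holds 2,150 messages. That surplus is exactly the duplication the Deduplicator has to remove.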

After retrieval, the data is passed to the Deduplicator component, whose main task is to discard duplicate messages. For example, if data arrives in three streams, Deduplicator forwards only one of the three copies of each event. If one or two Gate components fail, no important information is lost, because it still reaches Deduplicator through the third channel. The deduplicated data is then published to Kafka, where the client’s analysts work with it.
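To drop copies, a deduplicator must first recognize them. The sketch below makes two assumptions. First, each event carries a unique key, for instance exchange, symbol, and exchange-assigned trade ID. Second, copies from different Gates arrive close together in time, so only the most recent keys need to be remembered. The class name, the key format, and the bounded-window policy are assumptions, not details from the project.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical deduplicator (illustration only): forwards the first copy of each
// event key and drops later copies. It remembers at most `capacity` recent keys.
// Not thread-safe: intended for a single consuming thread.
final class Deduplicator {
    private final Map<String, Boolean> seen;

    Deduplicator(int capacity) {
        // Insertion-ordered map that evicts its oldest key once capacity is exceeded
        this.seen = new LinkedHashMap<String, Boolean>(capacity * 2, 0.75f, false) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Boolean> eldest) {
                return size() > capacity;
            }
        };
    }

    /** Returns true if this is the first copy of the event and it should be forwarded. */
    boolean accept(String eventKey) {
        return seen.putIfAbsent(eventKey, Boolean.TRUE) == null;
    }
}
```

The window size trades memory for safety. A copy that arrives after its key has been evicted is forwarded a second time, so the window has to cover the worst expected delay between Gates.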

Sibedge experts carefully selected the technologies for the system. Maximum performance was essential to cope with the enormous workload the system would face. The team settled on Java, Spring Framework, Kafka, Kafka Streams, Apache Avro, and Kryo, the technologies that showed the best performance and reliability in stress tests.

Development

Crypto exchange emulator

The client needed to see that such a system was feasible in practice. The load of modern crypto exchanges currently does not exceed 40,000 events per second, but the Sibedge engineers aimed to process 600,000 events per second from each exchange. This large margin ensures that the system will keep working properly as the number of exchange users grows.

For stress testing, the team wrote a crypto exchange emulator to study the system’s behavior under the target loads. With it, developers could change the number of events per second, simulate dropped web connections, experiment with the number of data-reading threads, and more.
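The emulator itself is not published. What follows is a minimal hypothetical sketch of the idea. `ExchangeEmulator` emits synthetic JSON trade messages at a configurable rate and simulates a dropped connection every `disconnectEvery` messages. The message layout and all names are invented for illustration.

```java
import java.util.concurrent.locks.LockSupport;
import java.util.function.Consumer;

// Hypothetical stress-test emulator (illustration only): emits synthetic trade
// messages at a target rate and simulates periodic connection drops.
final class ExchangeEmulator {
    private final long intervalNanos;   // target gap between two events
    private final int disconnectEvery;  // simulate a drop every N events; 0 = never

    ExchangeEmulator(int eventsPerSecond, int disconnectEvery) {
        this.intervalNanos = 1_000_000_000L / eventsPerSecond;
        this.disconnectEvery = disconnectEvery;
    }

    /** Runs for {@code total} event slots and returns how many simulated drops occurred. */
    int run(long total, Consumer<String> sink, Runnable onDisconnect) {
        long next = System.nanoTime();
        int drops = 0;
        for (long i = 0; i < total; i++) {
            long wait = next - System.nanoTime();
            if (wait > 0) LockSupport.parkNanos(wait);   // pace to the target rate
            next += intervalNanos;                        // if we overslept, later events burst to catch up
            if (disconnectEvery > 0 && i > 0 && i % disconnectEvery == 0) {
                drops++;
                onDisconnect.run();                       // the event scheduled in this slot is lost
                continue;
            }
            sink.accept("{\"symbol\":\"BTCUSDT\",\"tradeId\":" + i
                    + ",\"price\":\"27000.50\",\"qty\":\"0.015\",\"time\":"
                    + (1_672_531_200_000L + i) + "}");
        }
        return drops;
    }
}
```

At hundreds of thousands of events per second, the gap between events is only a few microseconds. That is usually finer than `parkNanos` can sleep reliably. The loop therefore holds the average rate rather than exact spacing, letting `next` fall behind and then emitting catch-up bursts.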

Transfer and data format

Any data exchange involves serialization on the sending side and deserialization on the receiving side. Serialization converts data into a sequence of bytes that can be transferred between system components or written to a file. Deserialization converts those bytes back into a form that applications and people can work with.
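As a minimal illustration of a round trip, the hypothetical `TradeEvent` class below serializes itself to bytes with the standard `DataOutputStream`. It is then reconstructed with `DataInputStream`. The field set is an assumption. The point is only that the reader must consume the fields in exactly the order the writer produced them.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical trade event used only to illustrate serialization and deserialization.
final class TradeEvent {
    final String symbol;
    final long tradeId;
    final long priceE8;   // price * 10^8, stored as a fixed-point integer
    final long time;      // epoch milliseconds

    TradeEvent(String symbol, long tradeId, long priceE8, long time) {
        this.symbol = symbol;
        this.tradeId = tradeId;
        this.priceE8 = priceE8;
        this.time = time;
    }

    // Serialization: object -> byte sequence
    byte[] toBytes() {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeUTF(symbol);     // 2-byte length prefix + modified UTF-8 bytes
            out.writeLong(tradeId);   // 8 bytes each, big-endian
            out.writeLong(priceE8);
            out.writeLong(time);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Deserialization: byte sequence -> object, reading fields in the same order
    static TradeEvent fromBytes(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            String symbol = in.readUTF();
            long tradeId = in.readLong();
            long priceE8 = in.readLong();
            long time = in.readLong();
            return new TradeEvent(symbol, tradeId, priceE8, time);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```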

Crypto exchanges deliver data in JSON. The format has a simple, human-readable structure, is easy to edit, and is supported on virtually every platform. The developers had to choose the best of the many JSON parsing solutions by trial and error.

Performance optimization

Initially, the developers chose the Jackson Java library: it is fast, flexible, and easy to get started with. In practice, however, the system’s throughput turned out to be insufficient. The bottlenecks were serializing messages for writing to files and sending to Kafka, and deserializing JSON messages from the exchange.

Jackson was then replaced with the DSL-JSON library. Instead of relying on generic methods, it generates dedicated methods for each specific JSON structure. This gave a further speedup of up to 66% in serialization and 40% in deserialization. In the end, however, the team abandoned DSL-JSON as well.
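The difference between generic and specialized code can be sketched by hand. Both hypothetical methods below produce the same JSON. The generic one must inspect every value’s type at run time. The specialized one hard-codes the field names and types for one known structure, in the spirit of the per-structure methods described above. This is not DSL-JSON’s actual generated code.

```java
import java.util.Map;

// Hypothetical comparison of a generic JSON writer with a specialized one (illustration only).
final class JsonWriters {
    // Generic: works for any field map, but checks each value's type at run time.
    static void writeGeneric(Map<String, Object> fields, StringBuilder out) {
        out.append('{');
        boolean first = true;
        for (Map.Entry<String, Object> e : fields.entrySet()) {
            if (!first) out.append(',');
            first = false;
            out.append('"').append(e.getKey()).append("\":");
            Object v = e.getValue();
            if (v instanceof String) {
                out.append('"').append((String) v).append('"'); // sketch: no escaping
            } else {
                out.append(v);                                  // numbers and booleans
            }
        }
        out.append('}');
    }

    // Specialized: one method per known structure; names and types are fixed in the code.
    static void writeQuote(String symbol, long bidE8, long askE8, StringBuilder out) {
        out.append("{\"symbol\":\"").append(symbol)
           .append("\",\"bid\":").append(bidE8)
           .append(",\"ask\":").append(askE8)
           .append('}');
    }
}
```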

Further optimization led the developers to transfer data in a binary format instead of text. Binary input was not an option for deserialization, because the developers could not control the format of the data coming from the exchanges. The team chose Apache Avro for serialization to Kafka and Kryo for serialization to files. Compared with Jackson, this made serialization 3.42 times faster.
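Avro and Kryo were used through their own libraries, which are not reproduced here. The sketch below shows one reason binary formats are compact. It hand-codes the variable-length zig-zag encoding that the Avro specification describes for `int` and `long` values. Under this encoding, a 13-digit millisecond timestamp, which takes 13 bytes as JSON text, fits in 6 bytes. `ZigZagVarint` is a hypothetical helper, not part of the Avro API.

```java
import java.io.ByteArrayOutputStream;

// Hand-written zig-zag + base-128 varint coding of longs (illustration only;
// not the Apache Avro library API).
final class ZigZagVarint {
    static void writeLong(long n, ByteArrayOutputStream out) {
        long z = (n << 1) ^ (n >> 63);            // zig-zag: small |n| -> small unsigned value
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80)); // low 7 bits, continuation bit set
            z >>>= 7;
        }
        out.write((int) z);                       // final byte, continuation bit clear
    }

    /** Reads one value starting at pos[0] and advances pos[0] past it. */
    static long readLong(byte[] buf, int[] pos) {
        long z = 0;
        int shift = 0;
        byte b;
        do {
            b = buf[pos[0]++];
            z |= (long) (b & 0x7F) << shift;      // append the next 7 bits
            shift += 7;
        } while ((b & 0x80) != 0);
        return (z >>> 1) ^ -(z & 1);              // undo zig-zag
    }
}
```

Zig-zag mapping keeps small negative numbers short as well (−1 becomes 1, a single byte). A plain two's-complement varint would spend 10 bytes on any negative value.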

In parallel, the team studied how to make deserialization of JSON messages from the exchange as fast as possible, since this step consumed most of the CPU time. The weak point of library solutions is that they are designed for the most general case. They are convenient to use, but this generality severely limits their maximum performance.

As a result, the developers decided to write their own parser that uses no third-party JSON libraries. This choice made maximum performance possible under the following conditions:

- Unicode support is dropped; only ASCII characters are handled.

- Messages from exchanges are assumed to be well-formed, which eliminates the cost of validating the text structure.

- The message format is known in advance.

These conditions opened up further optimizations. The parser can quickly locate the required data by scanning for a single character. It identifies a JSON property name by its first one or two characters instead of parsing the whole name, and it can also “predict” the length of the required message section.
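The parser code itself is not public. The sketch below is a hypothetical illustration of these conditions for one invented message layout: `{"symbol":"BTCUSDT","tradeId":12345,"price":"27000.50","qty":"0.015","time":1672531200000}`. It works on raw ASCII bytes and performs no validation. Each property is identified by its first character, with the second one needed only to tell `tradeId` from `time`. Every property name is skipped by its known length instead of being read. Prices and quantities are converted straight into fixed-point longs scaled by 10^8. The layout, the names, the scaling, and the "no whitespace" rule are all assumptions.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical hand-written parser for one known, flat message layout (illustration only).
// Assumptions: ASCII only, no whitespace or escape sequences, well-formed input,
// and at most 8 fractional digits in price and quantity.
final class TradeParser {
    String symbol;
    long tradeId;
    long priceE8;   // price * 10^8
    long qtyE8;     // quantity * 10^8
    long time;

    private byte[] b;
    private int pos;

    void parse(byte[] msg) {
        b = msg;
        pos = 1;                                     // skip '{'
        while (pos < b.length && b[pos] == '"') {    // pos is at the quote opening a name
            int c0 = b[pos + 1];
            int c1 = b[pos + 2];
            switch (c0) {                            // first character identifies most names
                case 's': pos += 9; symbol = readString(); break;     // skip "symbol":
                case 'p': pos += 8; priceE8 = readFixedE8(); break;   // skip "price":
                case 'q': pos += 6; qtyE8 = readFixedE8(); break;     // skip "qty":
                case 't':                                             // second character decides
                    if (c1 == 'r') { pos += 10; tradeId = readLong(); } // skip "tradeId":
                    else           { pos += 7;  time = readLong(); }    // skip "time":
                    break;
                default: throw new IllegalArgumentException("unexpected name at " + pos);
            }
            pos++;                                   // skip ',' or the closing '}'
        }
    }

    private String readString() {
        int start = ++pos;                           // skip opening quote
        while (b[pos] != '"') pos++;
        String s = new String(b, start, pos - start, StandardCharsets.US_ASCII);
        pos++;                                       // skip closing quote
        return s;
    }

    private long readLong() {                        // unsigned decimal integer
        long v = 0;
        while (b[pos] >= '0' && b[pos] <= '9') v = v * 10 + (b[pos++] - '0');
        return v;
    }

    private long readFixedE8() {                     // quoted decimal -> value * 10^8
        pos++;                                       // skip opening quote
        long v = 0;
        int frac = 0;
        while (b[pos] != '.' && b[pos] != '"') v = v * 10 + (b[pos++] - '0');
        if (b[pos] == '.') {
            pos++;
            while (b[pos] != '"') { v = v * 10 + (b[pos++] - '0'); frac++; }
        }
        pos++;                                       // skip closing quote
        for (; frac < 8; frac++) v *= 10;            // scale to 8 decimal places
        return v;
    }
}
```

Any deviation from the expected layout produces wrong values instead of an error. A parser like this is therefore only safe when the exchange’s format is known and stable, which is exactly the trade-off the conditions above describe.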

To connect a new exchange to the system, developers write a parser of 100 to 500 lines of Java code. The parser strips all unnecessary information from incoming messages and passes only the required data to the system, which significantly increases performance. This approach proved to be 1.2 times faster than DSL-JSON and 3 times faster than Jackson.

Result

The developers achieved a peak throughput of 400,000 events per second from one exchange. This is still short of the 600,000 target but far above the roughly 40,000 events per second that Binance currently generates. The Deduplicator initially handled 800,000 events per second, which was not enough, because it receives a copy of every event from each of the Gate components. Its code was completely rewritten, raising its peak throughput to 2,500,000 events per second.

The client decided to settle on these results, judging that this throughput reserve would be sufficient for the coming years. In December 2022, once the necessary infrastructure was ready, the aggregator was put into operation and connected to four major crypto exchanges.
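The Deduplicator figures follow from its fan-in: it sees every event once per Gate. The actual number of Gates per exchange is not stated. Assuming three, as in the three-stream example in the architecture description, the arithmetic is:

$$
\begin{aligned}
3 \times 400{,}000 &= 1{,}200{,}000 \ \text{events/s} > 800{,}000 \ \text{events/s (original Deduplicator)} \\
3 \times 600{,}000 &= 1{,}800{,}000 \ \text{events/s} < 2{,}500{,}000 \ \text{events/s (rewritten Deduplicator)}
\end{aligned}
$$

Under this assumption, the original Deduplicator could not keep up even with the achieved Gate throughput. The rewritten one leaves headroom even at the 600,000-event target.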

The system continues to evolve and gain new features. For example, Sibedge engineers are developing a code generator that automatically creates parsers for new exchanges. Optimization work will continue so that the solution stays resilient as loads gradually increase. In the near future, the plan is to connect several new exchanges to the system.